By: Sabine Salnave

Hi! My name is Sabine, and I spent a summer at Project Reclass as a Cloud Infrastructure Intern. By the end of my internship, I was able to Dockerize and deploy several applications to a K3s cluster for Project Reclass’ annual Hackday event, all without prior cloud infrastructure knowledge! Here’s how I got to that point.

When I was looking for a way to spend the summer after my first year in university, I was drawn to Project Reclass for a number of reasons. As a computer science major, I wanted to explore various parts of the technology field to see what kinds of things I’d be interested in; when I saw that Project Reclass was offering the opportunity to work alongside them while gaining experience in cloud infrastructure, I was eager to try it out. In addition, I knew that my work for this organization would allow me to help a cause I believed in: decreasing recidivism among incarcerated individuals. As a result, I was excited to be brought on to their summer internship team. The goal of the internship was to create a demonstration for Hackday, an annual event run by Project Reclass to showcase what the organization had been working towards all year and attract volunteers to help the cause. My job would be to create a Kubernetes cluster and show its resilience, modeling the technology that Project Reclass would be using in its deployment of Toynet, a network emulator built to teach computer networking principles.

There was only one issue with that: before coming to Project Reclass, I had no knowledge of cloud technologies! This meant that I’d have to do research to understand what the task was, let alone how to do it. My first task required me to learn how to create an EC2 instance using Amazon Web Services (AWS), so I began by searching Google for information about EC2 instances. I quickly learned that the official AWS documentation was the best source of information; general Google searches were more useful for specific problems that the documentation didn’t cover. It was through the documentation that I learned that an EC2 instance is a virtual computing environment in the cloud to which you can allocate computing resources (such as storage, processing power, and memory). You can use an instance to download programs and run applications just as you would on a physical machine, but without having to purchase hardware. With that knowledge, I was able to use AWS to build an EC2 instance, create a private network to put it in for security, create an SSH key pair to log in to the instance, and register the instance on a stack in AWS OpsWorks so that any user on the Project Reclass AWS account could use their SSH credentials to log in. I uploaded a test file to verify that everything worked, and it did!

The next step in the process had me working closely with Josiah, another intern for the summer, to learn two technologies: Docker and Kubernetes. Docker, as we learned, helps to build and run containers, which are packages containing applications and their necessary dependencies. Containers are lightweight and portable, making it easy to create and deploy programs on different platforms.

We used Docker to containerize two applications: Chatback, a chatroom application built by another member of the team that users could send messages in, and a chatbot that Josiah had created to send messages to Chatback. These programs were to be used in our demo as a stand-in for Toynet, since they were much simpler and easier to understand. We were a bit confused when it came to creating a Dockerfile (the blueprint used by Docker to build containers) for the chatbot, but with a bit of help from our mentor, Theo, we were able to build and run Chatback and the chatbot in an EC2 instance.

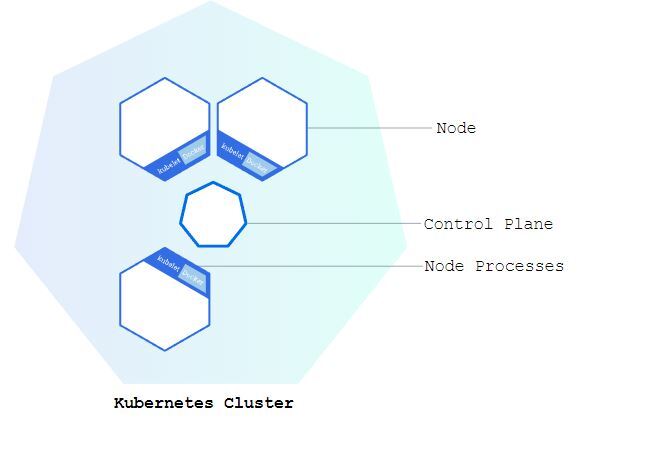

We then moved on to Kubernetes, a tool used to manage containerized applications within a cluster (consisting of worker nodes that house the containers in units called “pods”, and a master node that communicates with the worker nodes to allocate resources). Working with Kubernetes was more difficult than we had anticipated; once we had finally created and connected three EC2 instances to function as nodes in a cluster, we tried running the Chatback application as we had before and found that it wasn’t working.

For some odd reason, the containers that made up Chatback were ending up on different nodes, and as a result, they were unable to communicate with one another. We determined that the issue was with the pod network, the layer through which the containers were supposed to communicate. We were running low on time, though, so we couldn’t finish troubleshooting. Instead, we found a workaround: K3s.

K3s is a lightweight Kubernetes distribution, so it works much like a standard Kubernetes (K8s) installation, but with a few key differences. The binary used to deploy it is much smaller than what a standard installation requires, which is what makes K3s lightweight. In addition, a standard Kubernetes setup has multiple parts that need to be downloaded separately in order to build the cluster, while K3s has a single install script that you can run on all of your nodes to create a cluster in no time. That script includes the pod network that the containers need to communicate through, so by using K3s for our demo instead of standard K8s, we were able to circumvent our previous issue. There are many cases where a full Kubernetes installation would be the better choice; as we understood it at the time, K3s can’t work in multiple cloud environments simultaneously the way full Kubernetes can, and larger applications are much better suited to a full Kubernetes environment. For our demonstration, however, we would only be operating in a single cloud environment, and the Chatback and chatbot applications weren’t very big, so K3s served our purposes better. We used YAML files to deploy Chatback and the chatbot on the cluster and verified that the bots could interact with Chatback once deployed.
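To give a rough idea of what those deployment files describe, here is a minimal Python sketch that builds a Kubernetes `apps/v1` Deployment as a plain dictionary and prints it as JSON, which `kubectl apply -f` accepts just like YAML. This is not the file we actually used: the image name `example/chatback:latest`, the port `5000`, and the replica count are all made-up placeholders for illustration.

```python
import json
from typing import Dict


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> Dict:
    """Build a Kubernetes apps/v1 Deployment manifest as a plain dict."""
    # The selector must match the pod template's labels so the Deployment
    # knows which pods belong to it.
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # how many copies of the pod to keep running
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,  # hypothetical image name
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Placeholder values; the real image, port, and replica count would differ.
    manifest = deployment_manifest("chatback", "example/chatback:latest", 2, 5000)
    print(json.dumps(manifest, indent=2))
```

Saving that output to a file and applying it would ask the cluster to keep two copies of the container running, which is the same kind of request our YAML files made.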

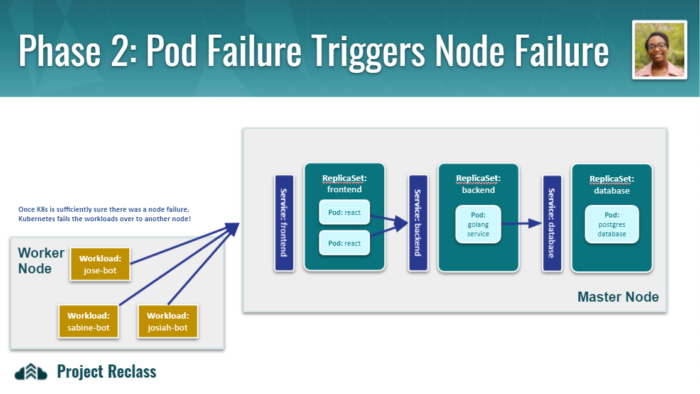

Once we had a functional, three-node K3s cluster hosted on three EC2 instances (with one master node and two worker nodes) that could run Chatback and multiple chatbots, we had one more task to accomplish before we were prepared for the demonstration: inducing failures. The point of the demo was to show how Kubernetes keeps applications running by replacing resources that go down. The plan was originally to make use of Datadog’s chaos controller, which used a YAML file to select resources at either the pod level or the node level to bring down. However, we realized that the chaos controller didn’t work as well with K3s as it did with standard Kubernetes. We tried to work out why the pods we were selecting weren’t going down by interpreting the controller logs and digging through the documentation on GitHub, but we ultimately decided that we didn’t have enough time to debug the chaos controller before the demo. We solved the issue by pivoting to a manually induced failure, rather than an automated one. We used different commands to first delete a pod, then clear a node. Then, we verified that Kubernetes was able to bring the resources back, which it was!
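The recovery behavior we were showing off comes down to one idea: the cluster keeps comparing the number of pods it is supposed to have with the number actually running, and starts new ones to close the gap. The Python sketch below is a toy model of that idea, not real Kubernetes code; the `ToyCluster` class, the node names, and the pod names are all invented for illustration.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Dict, List


@dataclass
class ToyCluster:
    """Toy model of a cluster that keeps a desired number of pods running."""

    desired_replicas: int
    nodes: Dict[str, List[str]] = field(default_factory=dict)  # node -> pod names
    _ids: count = field(default_factory=lambda: count(1), repr=False)

    def running_pods(self) -> List[str]:
        return [pod for pods in self.nodes.values() for pod in pods]

    def reconcile(self) -> List[str]:
        """Start pods until the running count matches the desired count."""
        started = []
        while len(self.running_pods()) < self.desired_replicas:
            if not self.nodes:
                raise RuntimeError("no nodes left to schedule pods on")
            # Place the new pod on the node that currently has the fewest pods.
            target = min(self.nodes, key=lambda n: len(self.nodes[n]))
            pod = f"chatbot-{next(self._ids)}"
            self.nodes[target].append(pod)
            started.append(pod)
        return started

    def delete_pod(self, pod: str) -> None:
        """Simulate a single pod failing or being deleted."""
        for pods in self.nodes.values():
            if pod in pods:
                pods.remove(pod)
                return
        raise KeyError(pod)

    def remove_node(self, node: str) -> List[str]:
        """Simulate a whole node going away; returns the pods lost with it."""
        return self.nodes.pop(node)


if __name__ == "__main__":
    cluster = ToyCluster(desired_replicas=3, nodes={"worker-1": [], "worker-2": []})
    print("initial:", cluster.reconcile())
    cluster.delete_pod("chatbot-1")           # pod-level failure
    print("after pod delete:", cluster.reconcile())
    lost = cluster.remove_node("worker-2")    # node-level failure
    print("lost with node:", lost)
    print("after node loss:", cluster.reconcile())
```

In the real demo, Kubernetes did this bookkeeping for us; all we had to do was delete a pod or clear a node and watch the replacements appear.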

On Hackday, we were finally able to present what we had accomplished. In addition to a brief presentation on the usefulness of containerization, Josiah and I demonstrated how the chatbots were able to communicate with Chatback, then brought down both a pod hosting a bot and an entire node live to show the audience how Kubernetes is able to recover lost resources. Most importantly, we tied it back to the mission—just as Kubernetes was able to deploy and recover our Chatback and chatbots with ease, it would also be able to easily deploy Toynet for multiple users and would recover quickly if something went wrong.

I’m incredibly proud of how much we were able to accomplish that summer; not only did we create and run a successful demonstration of Kubernetes during Hackday, but I was able to do it despite having no experience with any cloud technologies before. Thanks to my work with Project Reclass, I now have a good understanding of how to use Docker and leverage AWS resources to deploy applications, and can’t wait to utilize that knowledge with my personal projects in the future. Just goes to show what you can do when you take a chance on something new!
